OpenAI is in the middle of a mental health crisis.

One of the company’s top safety researchers, Andrea Vallone, will be leaving the company at the end of the year, according to WIRED. Vallone reportedly helped shape how ChatGPT responds to users experiencing mental health crises.

According to data released by OpenAI last month, roughly three million ChatGPT users display signs of serious mental health emergencies such as emotional reliance on AI, psychosis, mania, and self-harm, and more than a million users talk to the chatbot about suicide every week.

Examples of such cases have been widely reported in the media throughout this year. In what online circles have dubbed “AI psychosis,” some frequent AI chatbot users have exhibited dysfunctional delusions, hallucinations, and disordered thinking. One user in their 60s reported to the FTC that ChatGPT had led them to believe they were being targeted for assassination, and a community of Reddit users claims to have fallen in love with their chatbots.

Some of these cases have led to hospitalizations, and others have been fatal. ChatGPT was even allegedly linked to a murder-suicide in Connecticut.

The American Psychological Association has been warning the FTC about the inherent risks of AI chatbots being used as unlicensed therapists since February.

What finally got the company to take public action was a wrongful death lawsuit filed against OpenAI earlier this year by the parents of 16-year-old Adam Raine. According to the filing, Raine frequently used ChatGPT in the months leading up to his suicide, with the chatbot advising him on how to tie a noose and discouraging him from telling his parents about his suicidal ideation. Following the lawsuit, the company admitted that its safety guardrails degraded during longer user interactions.

The news of Vallone’s departure comes after months of mounting mental health complaints from ChatGPT users and only a day after a sobering investigation by the New York Times. In the report, the Times paints a picture of an OpenAI that was well aware of the mental health risks inherent in addictive AI chatbot design but pursued it anyway.

“Training chatbots to engage with people and keep them coming back presented risks,” OpenAI’s former policy researcher Gretchen Krueger told the New York Times, adding that some harm to users “was not only foreseeable, it was foreseen.” Krueger left the company in the spring of 2024.

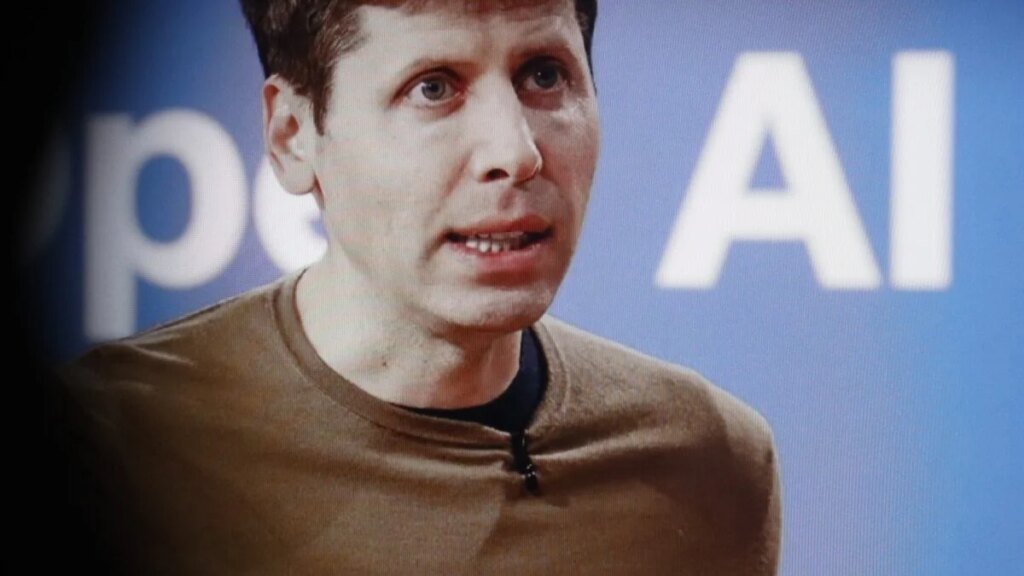

The concerns center mostly on a clash between OpenAI’s push, now as an official for-profit, to increase daily chatbot users and the founding vision it promised to uphold as a nonprofit: a future in which safe AI benefits humanity.

Central to that discrepancy is GPT-4o, ChatGPT’s next-to-latest model, which was released in May of last year and drew significant ire over its sycophancy problem, aka its tendency to be a “yes man” to a fault. GPT-4o has been described as addictive: users revolted when OpenAI replaced it with the less personable, less fawning GPT-5 in August.

According to the Times report, the company’s Model Behavior team, responsible for the chatbot’s tone, created a Slack channel to discuss the problem of sycophancy before the model was released, but the company ultimately decided that performance metrics were more important.

After concerning cases started mounting, the company began working to combat the problem. OpenAI hired a psychiatrist full-time in March, the report says, and accelerated the development of sycophancy evaluations, the likes of which competitor Anthropic has had for years.

According to experts cited in the report, GPT-5 is better at detecting mental health issues but still cannot pick up on harmful patterns in long conversations.

The company has also begun nudging users to take a break when they are in long conversations (a measure that was recommended months earlier), and it introduced parental controls. OpenAI is also working on launching an age prediction system to automatically apply “age-appropriate settings” for users under 18 years old.

But the head of ChatGPT, Nick Turley, reportedly told employees in October that the safer chatbot was not connecting with users, and he outlined goals to increase ChatGPT’s daily active users by 5% by the end of this year.

Around that time, OpenAI CEO Sam Altman announced that the company would be relaxing some of its previous restrictions on the chatbot, namely that ChatGPT would now have more personality (à la GPT-4o) and would allow “erotica for verified adults.”