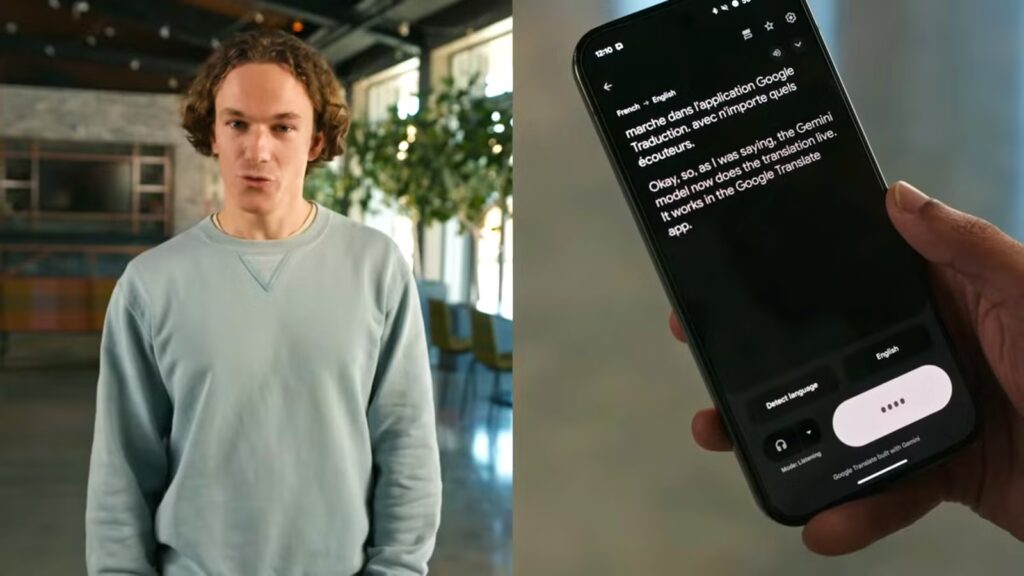

Google is rolling out a major update to its Gemini audio models, bringing powerful live speech-to-speech translation capabilities to the Google Translate app. This upgrade uses the improved Gemini 2.5 Flash Native Audio model, which is designed to handle complex voice interactions.

This new live speech translation feature is designed specifically for headphones, essentially letting you hear the world around you translated in real time. This beta experience is rolling out right now within the Google Translate app. If you’re traveling or just need to communicate across a language barrier, this is a feature that could really change how you interact with people who don’t speak your native language.

The functionality is split into two modes. First, there is continuous listening. This is perfect for situations like listening to a lecture or following a group conversation. The AI listens to several different languages at once and converts them all into the one language you understand. You just put your headphones in and hear the world translated directly.

Second, there is two-way conversation. This handles real-time translation between two specific languages, and it automatically swaps languages on the fly depending on who is talking. For instance, if you speak English and the person across from you speaks Hindi, you hear English translations instantly in your headphones, and when you speak back, your phone broadcasts the Hindi translation.
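To make the two-way swap concrete, here is a minimal sketch of the routing decision described above. It is purely illustrative and is not Google's implementation: the `Route` type, the `route_utterance` function, and the `"headphones"` / `"phone_speaker"` labels are all hypothetical names, and the sketch assumes the language of each utterance has already been detected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical result type: which language to translate into, and where to play it."""
    target_language: str
    output: str  # "headphones" or "phone_speaker" (illustrative labels)


def route_utterance(detected_language: str, user_language: str, partner_language: str) -> Route:
    """Pick the translation direction based on who is speaking.

    Assumption: language detection has already happened upstream.
    """
    if detected_language == partner_language:
        # The other person spoke: translate for the user, privately in the headphones.
        return Route(target_language=user_language, output="headphones")
    if detected_language == user_language:
        # The user spoke: translate for the other person, out loud on the phone.
        return Route(target_language=partner_language, output="phone_speaker")
    raise ValueError(f"Language {detected_language!r} is not part of this conversation")


# The English/Hindi example from the article:
incoming = route_utterance("hi", user_language="en", partner_language="hi")
outgoing = route_utterance("en", user_language="en", partner_language="hi")
```

In this sketch, Hindi speech is routed to English audio in the headphones, and English speech is routed to Hindi audio on the phone speaker, which mirrors the behavior the article describes.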

The detail that really makes this feature stand out is called “style transfer.” This lets users hear the nuance of human speech. It preserves the speaker’s intonation, pacing, and pitch so the translation doesn’t sound robotic. Beyond that, the system offers robust noise filtering, meaning you can still hold a comfortable conversation even if you are in a loud, outdoor environment.

The translation coverage is extensive, supporting over 70 languages and 2,000 language pairs. This wide support is thanks to blending Gemini’s audio processing power with its vast language database.
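As a quick sanity check on those figures, the arithmetic below shows how a language count turns into a pair count. Google does not say how it counts pairs, so both interpretations here are assumptions: unordered pairs (English and Hindi counted once) and ordered pairs (English to Hindi and Hindi to English counted separately).

```python
from math import comb

languages = 70  # lower bound from the article ("over 70 languages")

# Assumption 1: a pair is unordered, so English/Hindi counts once.
unordered_pairs = comb(languages, 2)

# Assumption 2: a pair is directional, so each direction counts separately.
ordered_pairs = languages * (languages - 1)

print(unordered_pairs)  # 2415
print(ordered_pairs)    # 4830
```

Either way, 70 languages yield more than 2,000 combinations, so the two numbers in the announcement are consistent with each other.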

Another key component is multilingual input and auto-detection. This lets the system understand multiple languages simultaneously in a single session. You do not need to mess with the settings, and you do not even need to know what language is being spoken to start translating. The app figures out the language on its own and starts translating.

Behind all this is the updated Gemini 2.5 Flash Native Audio model itself, which is also powering Google’s live voice agents across various products. Google has improved the model in three key technical areas that should result in snappier performance for those using the tools.

First, the model now has sharper function calling. This means the system is more reliable when it needs to connect to outside tools. For example, it can grab live data while you are talking without pausing or breaking the flow. Second, it follows instructions more closely: Google reports a 90% adherence rate to developer instructions, which is up from 84% in previous versions.

Finally, the conversations themselves should be smoother. The model is better at recalling what you said earlier in the chat. This helps it stay on topic and makes the exchange feel less disjointed. I would say this improvement in multi-turn conversation quality is what’s truly needed for stability in any voice assistant.

These improvements are not just for the Translate app. The new Gemini 2.5 Flash Native Audio is rolling out across Google products, including Google AI Studio, Vertex AI, Gemini Live, and Search Live. You can also expect more effective brainstorming sessions with Gemini Live or better real-time help in Search Live.

If you’d like to try out the live translation feature, the beta experience is rolling out starting today in the Google Translate app. You can connect your headphones to your device and tap “Live translate.” For now, this experience is available on Android devices in the US, Mexico, and India, with support for iOS and more regions coming soon.

Source: Google