This is The Stepback, a weekly newsletter breaking down one essential story from the tech world. For more on the legal morass of AI, follow Adi Robertson. The Stepback arrives in our subscribers’ inboxes at 8AM ET. Opt in for The Stepback here.

The song was called “Heart on My Sleeve,” and if you didn’t know better, you might guess you were hearing Drake. If you did know better, you were hearing the starting bell of a new legal and cultural battle: the fight over how AI services should be able to use people’s faces and voices, and how platforms should respond.

Back in 2023, the AI-generated faux-Drake track “Heart on My Sleeve” was a novelty; even so, the problems it presented were clear. The song’s close imitation of a major artist rattled musicians. Streaming services removed it on a copyright technicality. But the creator wasn’t making a direct copy of anything, just a very close imitation. So attention quickly turned to the separate area of likeness law. It’s a field that was once synonymous with celebrities going after unauthorized endorsements and parodies, and as audio and video deepfakes proliferated, it felt like one of the few tools available to regulate them.

Unlike copyright, which is governed by the Digital Millennium Copyright Act and multiple international treaties, there’s no federal law around likeness. It’s a patchwork of varying state laws, none of which were originally designed with AI in mind. But the past few years have seen a flurry of efforts to change that. In 2024, Tennessee Gov. Bill Lee and California Gov. Gavin Newsom — both of whose states rely heavily on their media industries — signed bills that expanded protections against unauthorized replicas of entertainers.

But law has predictably moved more slowly than tech. Last month OpenAI launched Sora, an AI video generation platform aimed specifically at capturing and remixing real people’s likenesses. It opened the floodgates to a torrent of often startlingly realistic deepfakes, including of people who didn’t consent to their creation. OpenAI and other companies are responding by implementing their own likeness policies — which, in the absence of anything else, could turn into the internet’s new rules of the road.

OpenAI has denied it was reckless launching Sora, with CEO Sam Altman claiming that if anything, it was “way too restrictive” with guardrails. Yet the service has still generated plenty of complaints. It launched with minimal restrictions on the likenesses of historical figures, only to reverse course after Martin Luther King Jr.’s estate complained about “disrespectful depictions” of the assassinated civil rights leader spewing racism or committing crimes. It touted careful restrictions on unauthorized use of living people’s likenesses, but users found ways around them to put celebrities like Bryan Cranston into Sora videos doing things like taking a selfie with Michael Jackson, leading to complaints from SAG-AFTRA that pushed OpenAI to strengthen guardrails in unspecified ways there too.

Even some people who did authorize Sora cameos (its word for a video using a person’s likeness) were unsettled by the results, including, for women, all kinds of fetish output. Altman said he hadn’t realized people might have “in-between” feelings about authorized likenesses, like not wanting a public cameo “to say offensive things or things that they find deeply problematic.”

Sora’s been addressing problems with changes like its tweak to the historical figures policy, but it’s not the only AI video service, and things are getting — in general — very weird. AI slop has become de rigueur for President Donald Trump’s administration and some other politicians, including gross or outright racist depictions of specific political enemies: Trump responded to last week’s No Kings protests with a video that showed him dropping shit on a person who resembled liberal influencer Harry Sisson, while New York City mayoral candidate Andrew Cuomo posted (and quickly deleted) a “criminals for Zohran Mamdani” video that showed his Democratic opponent gobbling handfuls of rice. As Kat Tenbarge chronicled in Spitfire News earlier this month, AI videos are becoming ammunition in influencer drama as well.

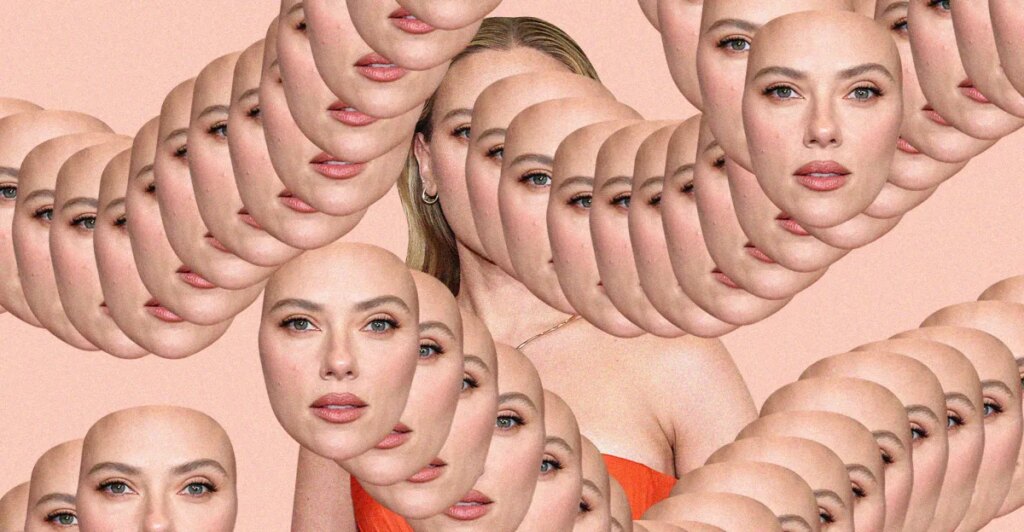

There’s an almost constant potential threat of legal action around unauthorized videos, as celebrities like Scarlett Johansson have lawyered up over use of their likeness. But unlike with AI copyright infringement allegations, which have generated numerous high-profile lawsuits and nearly constant deliberation inside regulatory agencies, few likeness incidents have escalated to that level — perhaps in part because the legal landscape is still in flux.

When SAG-AFTRA thanked OpenAI for changing Sora’s guardrails, it used the opportunity to promote the Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act, a years-old attempt to codify protections against “unauthorized digital replicas.” The NO FAKES Act, which has also garnered support from YouTube, introduces nationwide rights to control the use of a “computer-generated, highly realistic electronic representation” of a living or dead person’s voice or visual likeness. It includes liability for online services that knowingly allow unauthorized digital replicas, too.

The NO FAKES Act has generated severe criticism from online free speech groups. The EFF dubbed it a “new censorship infrastructure” mandate that forces platforms to filter content so broadly it will almost inevitably lead to unintentional takedowns and a “heckler’s veto” online. The bill includes carveouts for parody, satire, and commentary that should be allowed even without authorization, but they’ll be “cold comfort for those who cannot afford to litigate the question,” the organization warned.

Opponents of the NO FAKES Act can take solace in how little legislation Congress manages to pass these days — we’re currently living through the second-longest federal government shutdown in history, and there’s even a separate push to block state AI regulation that could nullify new likeness laws. But pragmatically, likeness rules are still coming. Earlier this week YouTube announced it will let Partner Program creators search for unauthorized uploads using their likeness and request their removal. The move expands on existing policies that, among other things, let music industry partners take down content that “mimics an artist’s unique singing or rapping voice.”

And throughout all this, social norms are still evolving. We’re entering a world where you can easily generate a video of almost anyone doing almost anything — but when should you? In many cases, those expectations remain up for grabs.

- Most of this recent conversation is about AI videos of people doing simply weird or silly things, but historically, research indicates the overwhelming majority of deepfakes have been pornographic images of women, often made without consent. Beyond Sora there’s a whole different conversation about things like the output of AI nudify services, and the legal issues are similar to those concerning other nonconsensual sexual imagery.

- On top of the basic legal issue of when a likeness is unauthorized, there are also questions like when a video might be defamatory (if it’s sufficiently realistic) or harassing (if it’s part of a larger pattern of stalking and threats), which could make individual situations even more complicated.

- Social platforms are used to being almost always shielded from liability through Section 230, which says they can’t be treated as the publisher or speaker of third-party content. As more and more services take the active step of helping users generate content, how far Section 230 will shield the resulting images and video seems like a fascinating question.

- Despite long-standing fears that AI will make it truly impossible to distinguish phantasms from reality, it’s still often simple to use context and “tells” (from specific editing tics to obvious watermarks) to figure out whether a video was AI-generated. The problem is many people don’t look closely enough or simply don’t care if it’s fake.

- Sarah Jeong’s warning about seamlessly manipulated photographs is even more relevant now than it was when she published it in 2024.

- The New York Times has a comprehensive look at Trump’s particular affinity for AI-generated content.

- Max Read’s analysis of Sora as a social platform and whether it will “work.”
